Introduction

Artificial intelligence (AI) continues to evolve rapidly, and Liquid Foundation Models (LFMs) aim to raise the bar for efficiency, adaptability, and scalability. Developed by Liquid AI, LFMs are a class of AI models that focus on real-time adaptability and efficient handling of diverse data types, which sets them apart from traditional transformer models. In this article, we will explore what makes LFMs unique, their applications, and how they are reshaping the AI landscape. We’ll also look at their potential impact on various industries, future advancements, and how to get started with these models.

What are Liquid Foundation Models?

Liquid Foundation Models are generative AI models built on Liquid Neural Networks (LNNs), a neural network architecture inspired by the brain’s ability to adapt and learn continuously. Compared with traditional transformer-based models, LFMs are designed to process sequential data such as text, audio, video, and biological signals with high efficiency. Because the underlying networks can adjust in real time during inference, LFMs suit applications that require fast and dynamic responses, such as autonomous vehicle navigation, where split-second decisions are critical for safety.

The architecture of LFMs draws on principles from dynamical systems, signal processing, and linear algebra. These principles help LFMs process complex sequences efficiently, model changes over time, and keep computational cost down, which makes them well-suited to diverse and evolving data types. The result is lower memory usage and higher computational efficiency than traditional models, which matters most when dealing with long sequences of data. These efficiency gains make LFMs useful in environments where computational resources are limited and allow them to be deployed in both edge and cloud-based settings.

Why Liquid Neural Networks?

Liquid Neural Networks are at the core of what makes LFMs stand out. Inspired by the brain’s plasticity in learning, LNNs can dynamically reconfigure themselves as they receive new inputs. This characteristic allows LFMs to maintain flexibility in learning and adapting to new data over time, which contrasts sharply with traditional AI models that often require retraining from scratch to adapt to new data. This makes LFMs highly efficient in handling dynamic data environments, such as conversational AI, real-time monitoring, and predictive analytics.
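To make the idea of a network that responds dynamically to its inputs more concrete, here is a minimal sketch of a single liquid time-constant (LTC) neuron, the formulation used in the research literature on liquid neural networks. This is an illustration only: the article does not state that LFMs use this exact equation, and every weight and default value below is a hypothetical, hand-picked number.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function, used here as the neuron's nonlinearity."""
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, u, dt=0.1, tau=1.0, a=1.0, w_x=0.5, w_u=1.0, b=0.0):
    """Advance one liquid time-constant (LTC) neuron by one time step.

    Continuous-time model: dx/dt = -(1/tau + f) * x + f * a,
    where f = sigmoid(w_x * x + w_u * u + b) depends on the current input u.
    The update below is the semi-implicit ("fused") Euler step for that ODE.
    All weights here are hypothetical, hand-picked values.
    """
    f = sigmoid(w_x * x + w_u * u + b)
    return (x + dt * f * a) / (1.0 + dt * (1.0 / tau + f))


def effective_time_constant(x, u, tau=1.0, w_x=0.5, w_u=1.0, b=0.0):
    """Input-dependent time constant: tau / (1 + tau * f)."""
    f = sigmoid(w_x * x + w_u * u + b)
    return tau / (1.0 + tau * f)


def run(inputs, x0=0.0):
    """Feed a sequence of scalar inputs through the neuron, one at a time."""
    x = x0
    states = []
    for u in inputs:
        x = ltc_step(x, u)
        states.append(x)
    return states


if __name__ == "__main__":
    weak = run([-5.0] * 50)
    strong = run([5.0] * 50)
    print(f"final state, weak input:   {weak[-1]:.3f}")
    print(f"final state, strong input: {strong[-1]:.3f}")
    print(f"time constant, weak input:   {effective_time_constant(0.0, -5.0):.3f}")
    print(f"time constant, strong input: {effective_time_constant(0.0, 5.0):.3f}")
```

Note that no weights change here. What adapts is the neuron's effective time constant, which shrinks when the input is strong, so the same trained parameters produce faster or slower dynamics depending on what the network is currently seeing.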

Key Features of Liquid Foundation Models

1. Efficiency and Performance

LFMs are engineered to minimize memory usage while providing powerful computational capabilities. This is particularly beneficial for handling large context lengths and long sequential data, which would require substantial resources if processed by conventional transformer-based models. Moreover, the reduced computational overhead ensures that LFMs can be deployed even on devices with limited hardware capabilities, such as smartphones or IoT devices. This opens up new opportunities for AI-powered applications that need to run locally without relying on cloud services.
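A rough back-of-the-envelope calculation shows why long contexts are expensive for transformers. The sketch below compares the key/value cache of a decoder-only transformer, which grows linearly with sequence length, against a fixed-size recurrent state. The model dimensions are hypothetical, and the fixed-state function is an idealized stand-in rather than a measured LFM figure.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=32, head_dim=128, bytes_per_value=2):
    """Key/value cache size for a decoder-only transformer.

    Two tensors (keys and values) are stored per layer, per token.
    The default dimensions are assumptions chosen for illustration.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


def fixed_state_bytes(n_layers=32, state_dim=4096, bytes_per_value=2):
    """Size of a hypothetical fixed-size recurrent state (independent of seq_len)."""
    return n_layers * state_dim * bytes_per_value


if __name__ == "__main__":
    for seq_len in (1_024, 8_192, 32_768):
        kv_gib = kv_cache_bytes(seq_len) / 2**30
        state_kib = fixed_state_bytes() / 2**10
        print(f"{seq_len:>6} tokens: KV cache {kv_gib:6.2f} GiB, fixed state {state_kib:.0f} KiB")
```

With these assumed dimensions the cache costs 0.5 MiB per token, so a 32,768-token context needs 16 GiB for the cache alone, while the fixed-size state stays at 256 KiB regardless of length. Real models of either kind will differ in the details, but the linear-versus-constant growth pattern is the point.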

2. Real-Time Adaptability

A key advantage of LFMs is their ability to adapt in real time during inference. This means that LFMs can modify their behavior as they process information, making them well-suited for dynamic tasks, such as conversational AI, where responsiveness is crucial. Real-time adaptability also enhances their effectiveness in fields like autonomous vehicles, where rapid decision-making is essential. The capacity to adjust to new input data as it arrives gives LFMs an advantage over more rigid models that need retraining whenever the data changes.

3. Dynamical Systems-Based Architecture

By building on dynamical systems and signal processing, LFMs can process data more efficiently than traditional models. This architecture also supports scalability: LFMs can take on complex tasks without a large increase in memory requirements. The dynamical systems-based approach is also intended to capture long-term dependencies more effectively than transformer-based models, which makes LFMs suitable for time-series forecasting, predictive maintenance, and other applications involving sequential dependencies. As a result, LFMs can be trained and deployed across a wide variety of tasks, from simple data classification to complex pattern recognition.

4. Scalable Across Multiple Domains

Another significant feature of LFMs is their scalability across multiple domains. Whether it’s natural language processing, computer vision, or biological signal analysis, LFMs have shown the ability to handle different types of data effectively. This versatility allows developers to use the same underlying architecture for multiple applications, reducing the cost and complexity of deploying AI solutions in diverse environments. The scalability also ensures that LFMs can grow in complexity to meet the demands of increasingly sophisticated tasks while maintaining efficiency.

Comparison with Traditional AI Models

The table below highlights some of the key differences between LFMs and traditional transformer-based models:

| Feature | Liquid Foundation Models (LFMs) | Traditional Transformer-Based Models |
|---|---|---|
| Memory Usage | Low | High |
| Computational Efficiency | High | Variable |
| Architecture | Dynamical Systems-Based | Transformer-Based |
| Scalability | High | Limited by Memory |

Compared with traditional transformer-based models, LFMs combine high computational efficiency with low memory usage. This makes them particularly attractive for edge AI deployments where resources are constrained, yet high performance is required. The dynamical systems-based architecture not only supports scalability but also enables LFMs to process larger amounts of data in real time without compromising accuracy.

Applications of Liquid Foundation Models

Due to their versatility and efficiency, LFMs have a wide range of applications across different domains:

- Natural Language Processing (NLP): LFMs are capable of processing large amounts of text in real-time, making them suitable for tasks such as sentiment analysis, machine translation, and text generation. They are particularly useful for applications that require quick turnaround times, such as customer service bots and live chat support.

- Image and Video Recognition: LFMs can efficiently analyze images and videos, which makes them valuable for applications in surveillance systems, medical image analysis, and automatic image classification. Their ability to scale while maintaining low memory usage ensures that even high-resolution video streams can be processed without significant hardware requirements.

- Audio Analysis: These models are well-equipped to perform speech recognition and music analysis, enabling better voice assistants and audio-based applications. LFMs excel at capturing nuances in speech, which improves the accuracy of voice-based interfaces and can lead to more natural-sounding responses.

- Biological Signal Processing: Thanks to their ability to process sequential signals, LFMs are also applicable in healthcare, particularly for analyzing data like ECG or EEG, aiding in more precise diagnostics. By efficiently analyzing these signals, LFMs can assist doctors in identifying potential health issues faster and more accurately, which could significantly improve patient outcomes.

- Autonomous Systems: LFMs are also well-suited for autonomous systems, such as self-driving cars and drones, due to their real-time adaptability. Their capacity to adjust to new data instantly makes them ideal for navigation, obstacle avoidance, and decision-making in dynamic environments.

Future Developments of Liquid Foundation Models

Liquid AI plans to expand the capabilities of its models further by optimizing them for different hardware platforms, including NVIDIA, AMD, Qualcomm, and Apple. These platforms are significant because they represent a wide spectrum of computing environments, from powerful GPUs for data centers (NVIDIA) to efficient mobile processors (Qualcomm and Apple). Optimizing for all of them means LFMs can be deployed effectively across diverse devices, from high-performance servers to mobile and edge hardware, which broadens their applicability and impact. This cross-platform optimization aims to democratize AI, enabling more industries and developers to leverage advanced AI without needing specialized hardware. For more details, visit Liquid AI’s official page.

Moreover, Liquid AI is working on increasing the number of parameters in its models to tackle increasingly complex tasks. With larger parameter counts, LFMs like the LFM-40B (featuring 40.3 billion parameters) can handle highly sophisticated AI challenges while still maintaining high computational efficiency. A larger parameter count can also improve a model’s grasp of nuances in data, leading to more accurate predictions and better decision-making capabilities. The development roadmap also includes integrating new methodologies for training, such as distributed learning and active learning, to further reduce training time and improve model efficiency. For more technical details, check out Liquid AI’s research.

Notable Liquid Foundation Models

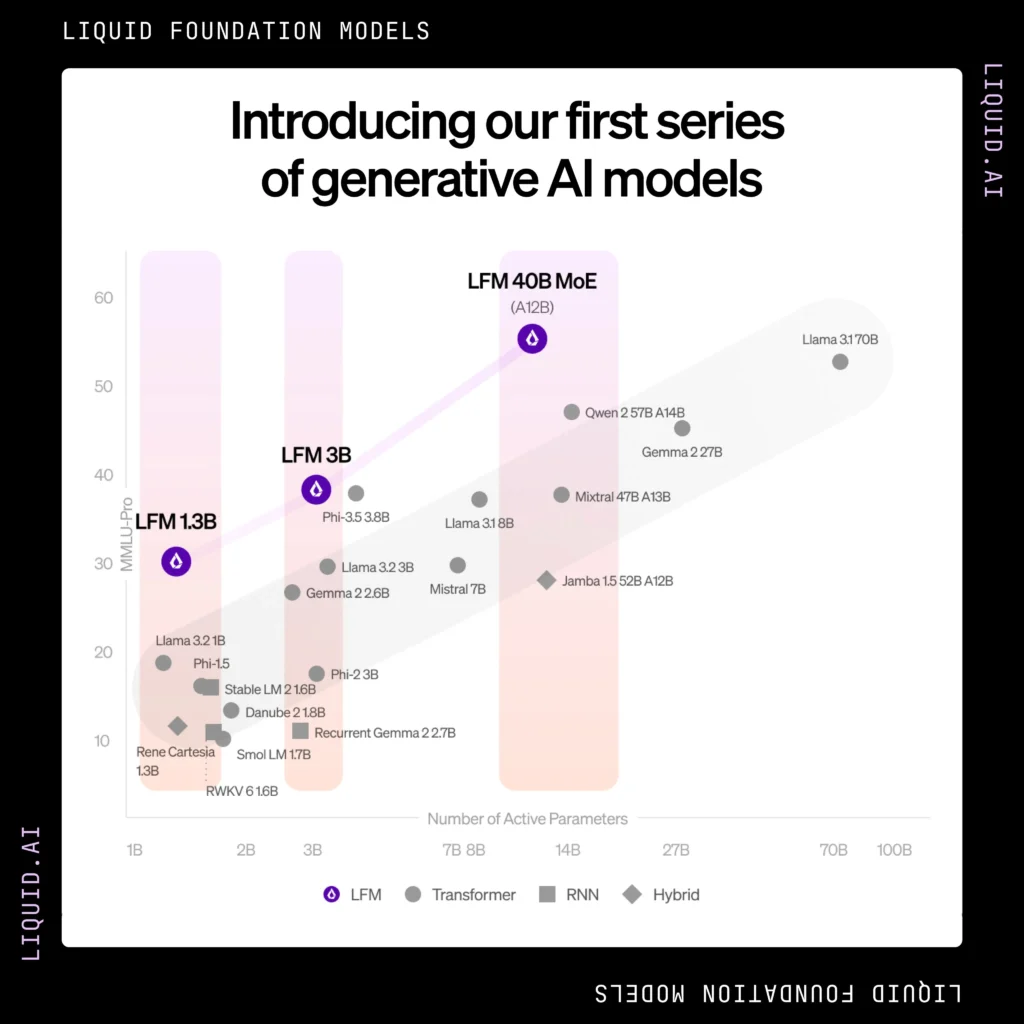

Liquid AI has developed three main LFMs, each tailored to different use cases:

- LFM-1B: This model contains 1.3 billion parameters and is optimized for resource-constrained environments. It is ideal for basic AI tasks such as classification and simple predictive analytics, especially in environments with limited computational power.

- LFM-3B: With 3.1 billion parameters, this model is ideal for edge deployments, such as mobile applications. Its balance of parameter count and computational efficiency makes it suitable for real-time applications, such as augmented reality (AR) and mobile games that require on-device AI.

- LFM-40B: The most advanced model in the series, featuring 40.3 billion parameters and a Mixture of Experts (MoE) architecture, designed for handling complex and demanding tasks. This model is perfect for enterprise-grade AI solutions, such as large-scale data analytics, financial modeling, and autonomous vehicle systems, where high performance and adaptability are critical.
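The parameter counts above translate directly into a lower bound on memory needed just to hold the weights. The sketch below does that arithmetic for a few common storage precisions. The precisions are assumptions for illustration, not formats the article says Liquid AI ships, and the totals exclude activations and any runtime state.

```python
# Parameter counts as stated in the list above.
PARAMS = {"LFM-1B": 1.3e9, "LFM-3B": 3.1e9, "LFM-40B": 40.3e9}

# Bytes per parameter for a few common storage precisions (assumed, not vendor figures).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_gb(n_params, precision):
    """Approximate weight storage in gigabytes (10^9 bytes)."""
    return n_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, n in PARAMS.items():
        sizes = ", ".join(f"{p}: {weight_gb(n, p):.1f} GB" for p in BYTES_PER_PARAM)
        print(f"{name}: {sizes}")
```

At 16-bit precision this works out to roughly 2.6 GB, 6.2 GB, and 80.6 GB respectively, which is consistent with positioning the two smaller models for constrained and edge hardware. For a Mixture of Experts model, all experts generally need to be resident in memory even though only some are active for a given token, so the full parameter count is the relevant figure for storage.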

How to Get Started with LFMs

If you’re interested in trying out LFMs, you can do so through platforms like Liquid Playground or Lambda. Liquid Playground provides an interactive environment for experimenting with LFMs’ capabilities, allowing developers to quickly test and iterate on their AI solutions without needing to set up complex infrastructure. Meanwhile, Lambda offers cloud-based tools to integrate LFMs into various applications, providing scalable resources that make it easy to deploy these models in production environments. These platforms allow developers and researchers to explore the capabilities of LFMs and experiment with their potential in real-world scenarios, speeding up the development cycle and reducing deployment costs.

Conclusion

Liquid Foundation Models offer a distinctive architecture that combines efficiency, scalability, and real-time adaptability, which positions them well to change how AI is applied across many fields and industries. Their ability to run on a wide range of hardware, process diverse data types efficiently, and adapt in real time gives them an edge over traditional models that often lack such versatility. As Liquid AI continues to enhance these models, we can expect new applications that expand what AI can achieve. From personalized healthcare solutions to advanced autonomous systems, LFMs have the potential to reshape how AI is integrated into our daily lives and to make advanced capabilities accessible to a broader range of people and industries.